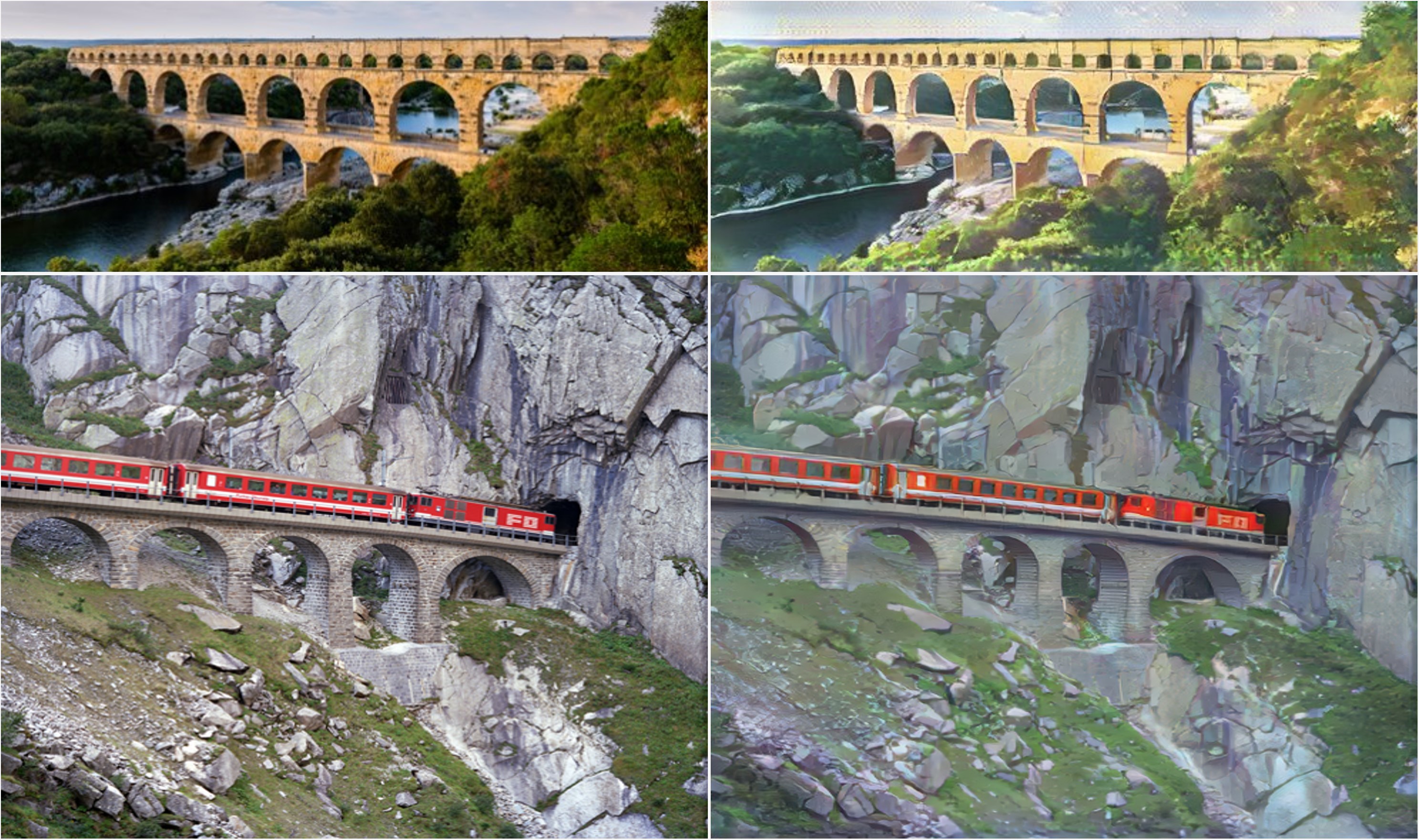

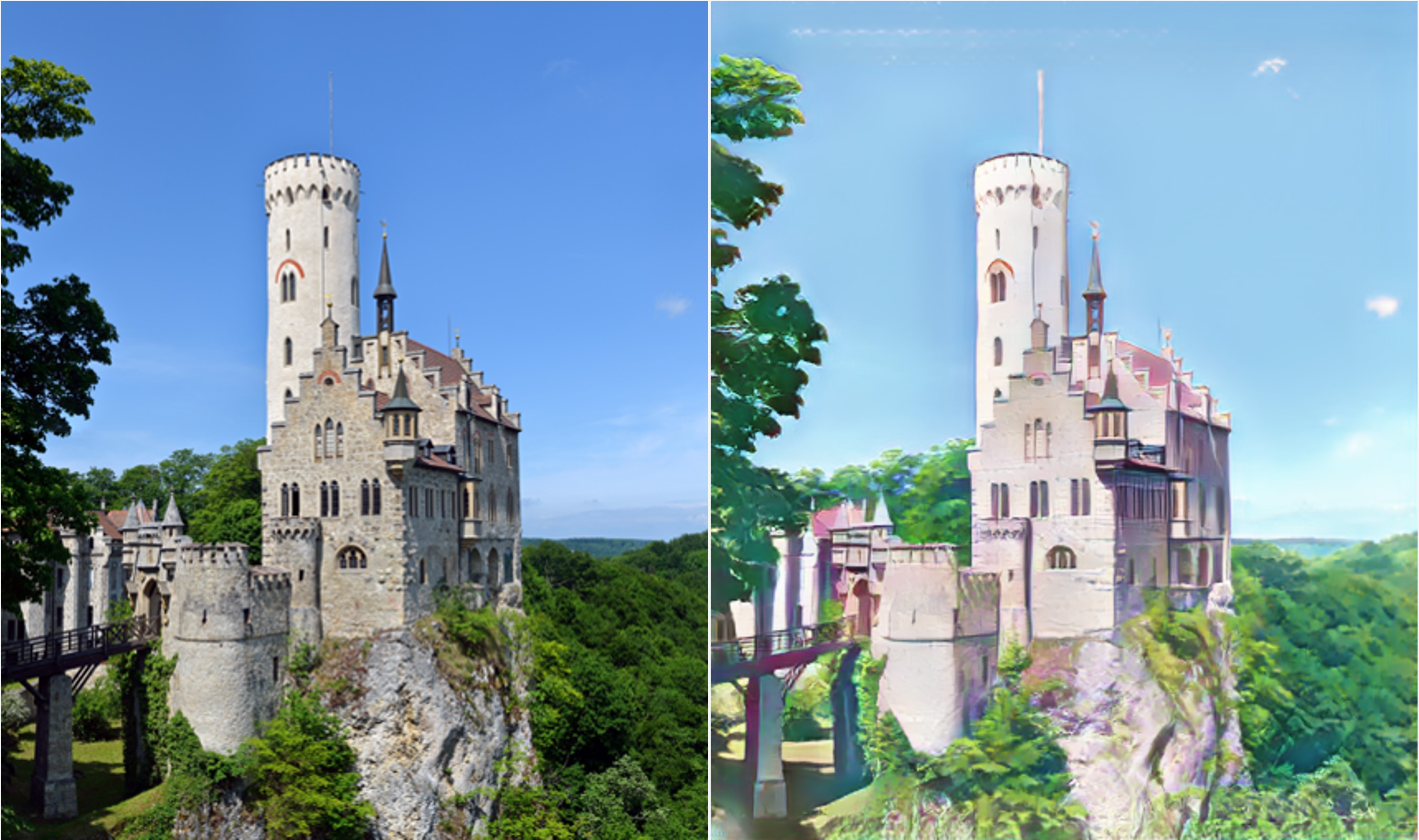

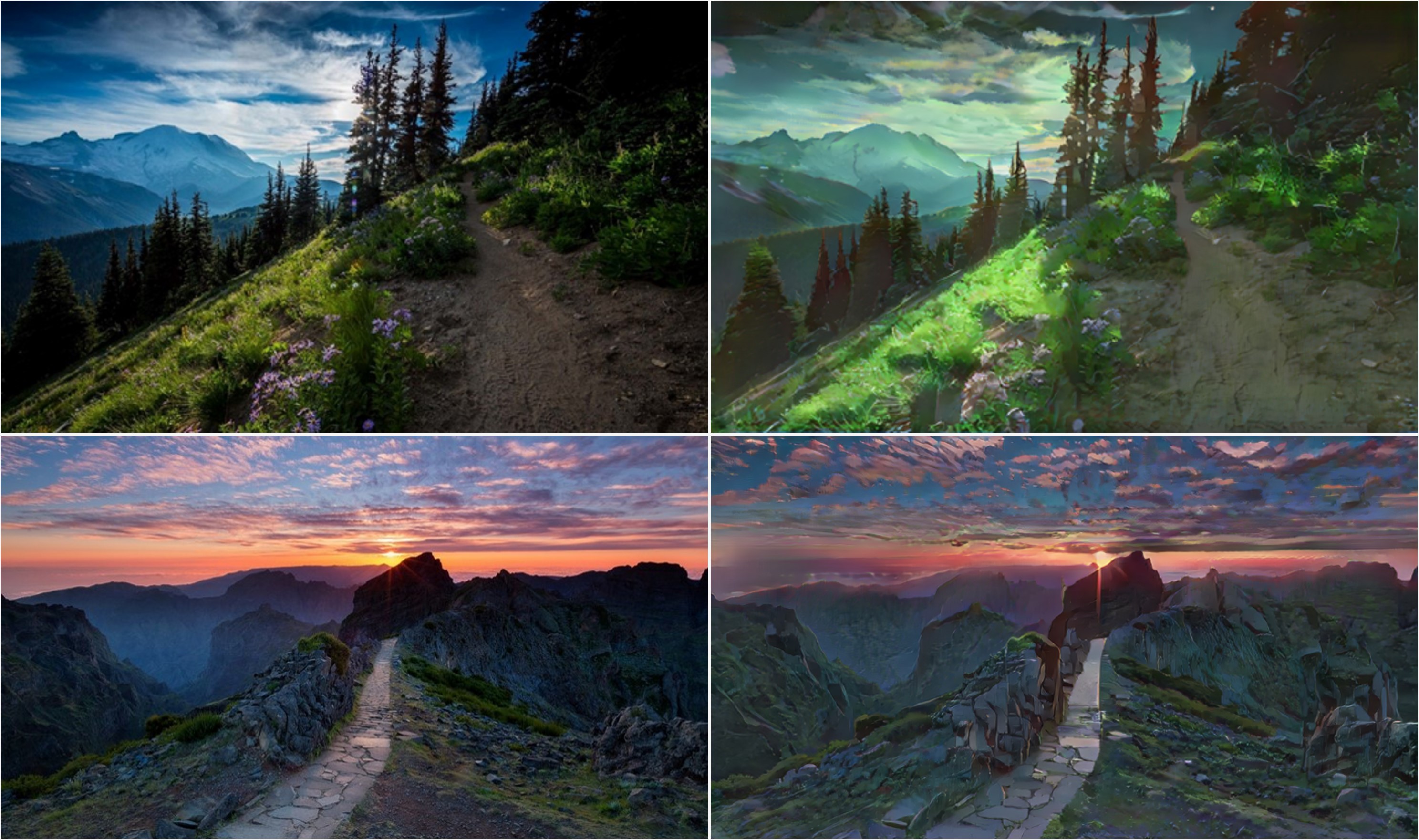

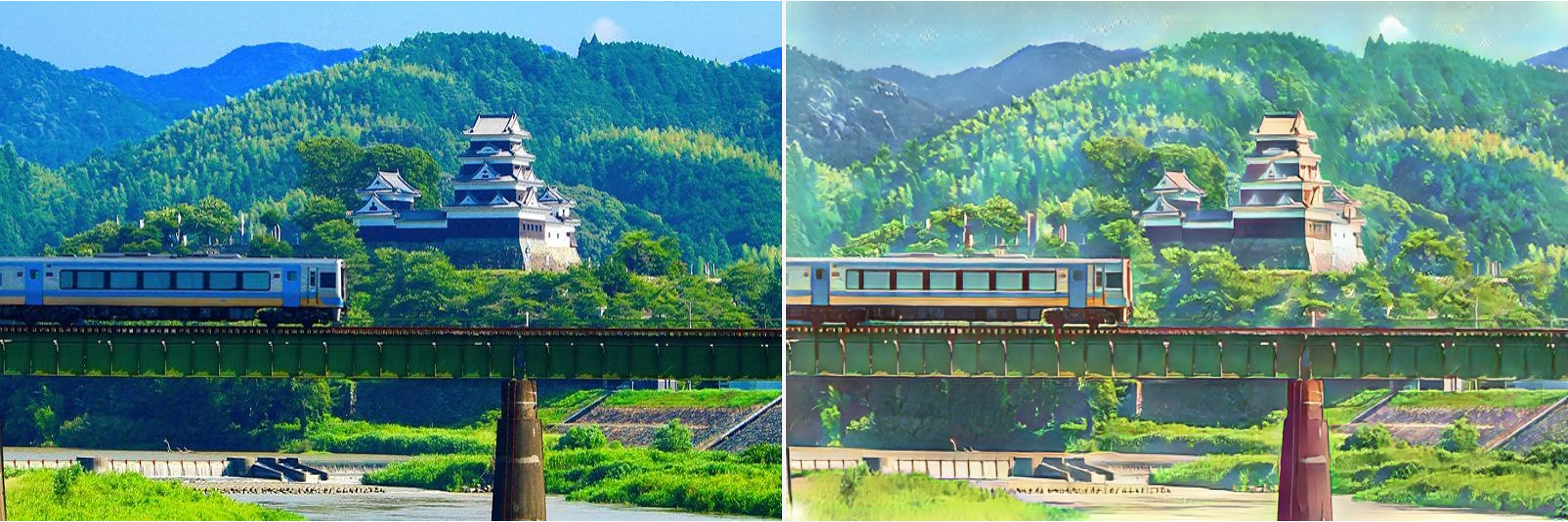

Automatic high-quality rendering of anime scenes from complex real-world images is of significant practical value. The challenges of this task lie in the complexity of the scenes, the unique features of anime style, and the lack of high-quality datasets to bridge the domain gap. Despite promising attempts, previous methods still fall short of producing satisfactory results that combine consistent semantic preservation, evident stylization, and fine details.

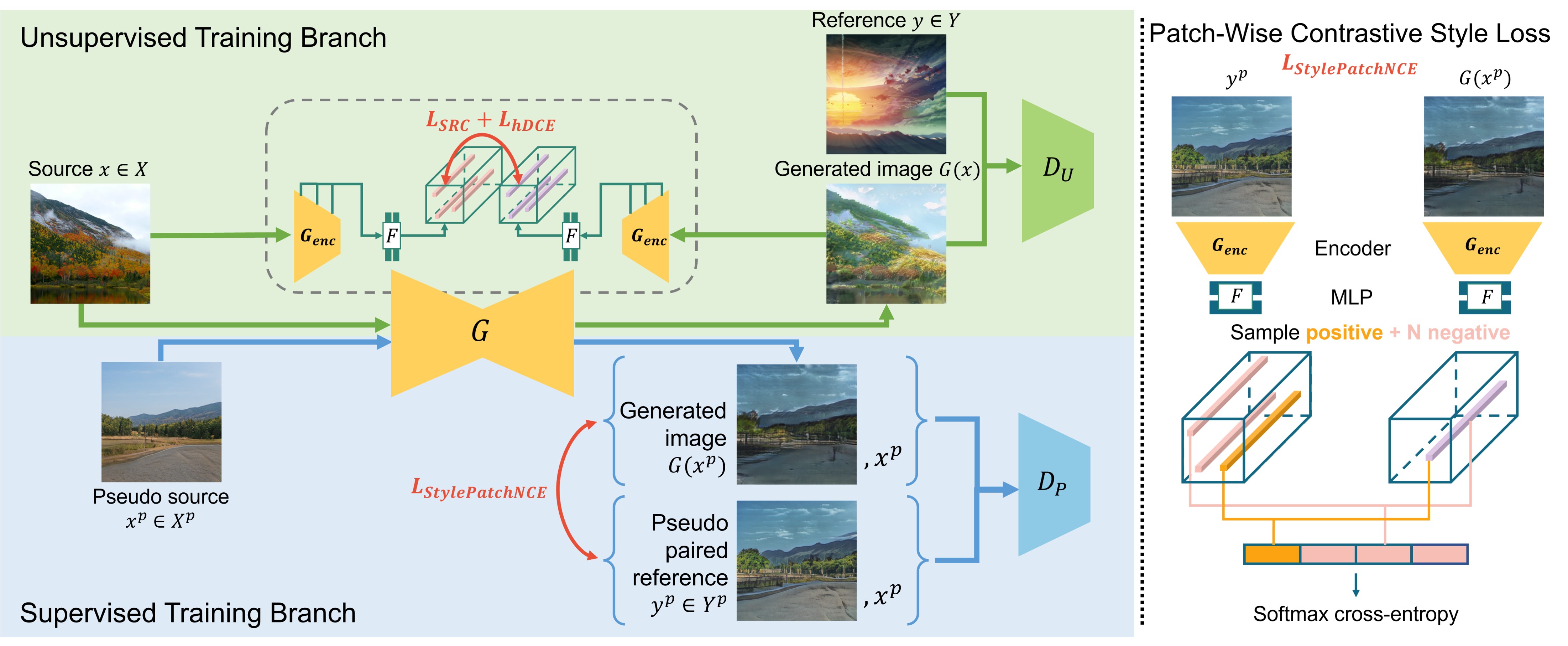

In this study, we propose Scenimefy, a novel semi-supervised image-to-image translation framework that addresses these challenges. Our approach guides the learning with structure-consistent pseudo-paired data, which simplifies the purely unsupervised setting. The pseudo data are derived from a semantic-constrained StyleGAN that leverages rich model priors such as CLIP. We further apply segmentation-guided data selection to obtain high-quality pseudo supervision. A patch-wise contrastive style loss is introduced to improve stylization and fine details.
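The exact form of the patch-wise contrastive style loss is not given in this overview. As a rough illustration of the general idea only, the sketch below computes a generic InfoNCE-style loss over patch features: each query patch is pulled toward the key at the same index and pushed away from the keys of all other patches. The function name `patch_info_nce`, the temperature of 0.07, and the use of plain Python lists as feature vectors are assumptions made for illustration; they are not taken from the Scenimefy implementation.

```python
import math


def _dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(_dot(v, v)) or 1.0
    return [x / norm for x in v]


def patch_info_nce(queries, keys, temperature=0.07):
    """Mean InfoNCE loss over patches (hypothetical helper, not the paper's code).

    queries[i] and keys[i] are features of the same patch (the positive pair);
    keys[j] with j != i act as negatives for queries[i].
    """
    if not queries or len(queries) != len(keys):
        raise ValueError("queries and keys must be non-empty and equally long")

    q = [_normalize(v) for v in queries]
    k = [_normalize(v) for v in keys]

    total = 0.0
    for i, qi in enumerate(q):
        # Cosine similarity to every key, sharpened by the temperature.
        logits = [_dot(qi, kj) / temperature for kj in k]
        # Numerically stable log-sum-exp over all keys.
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        # Cross-entropy with the matching key as the target class.
        total += log_sum - logits[i]
    return total / len(q)
```

In this sketch the loss is small when each patch matches its own counterpart and large when the correspondence is broken, which is the behavior a patch-level contrastive objective relies on.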

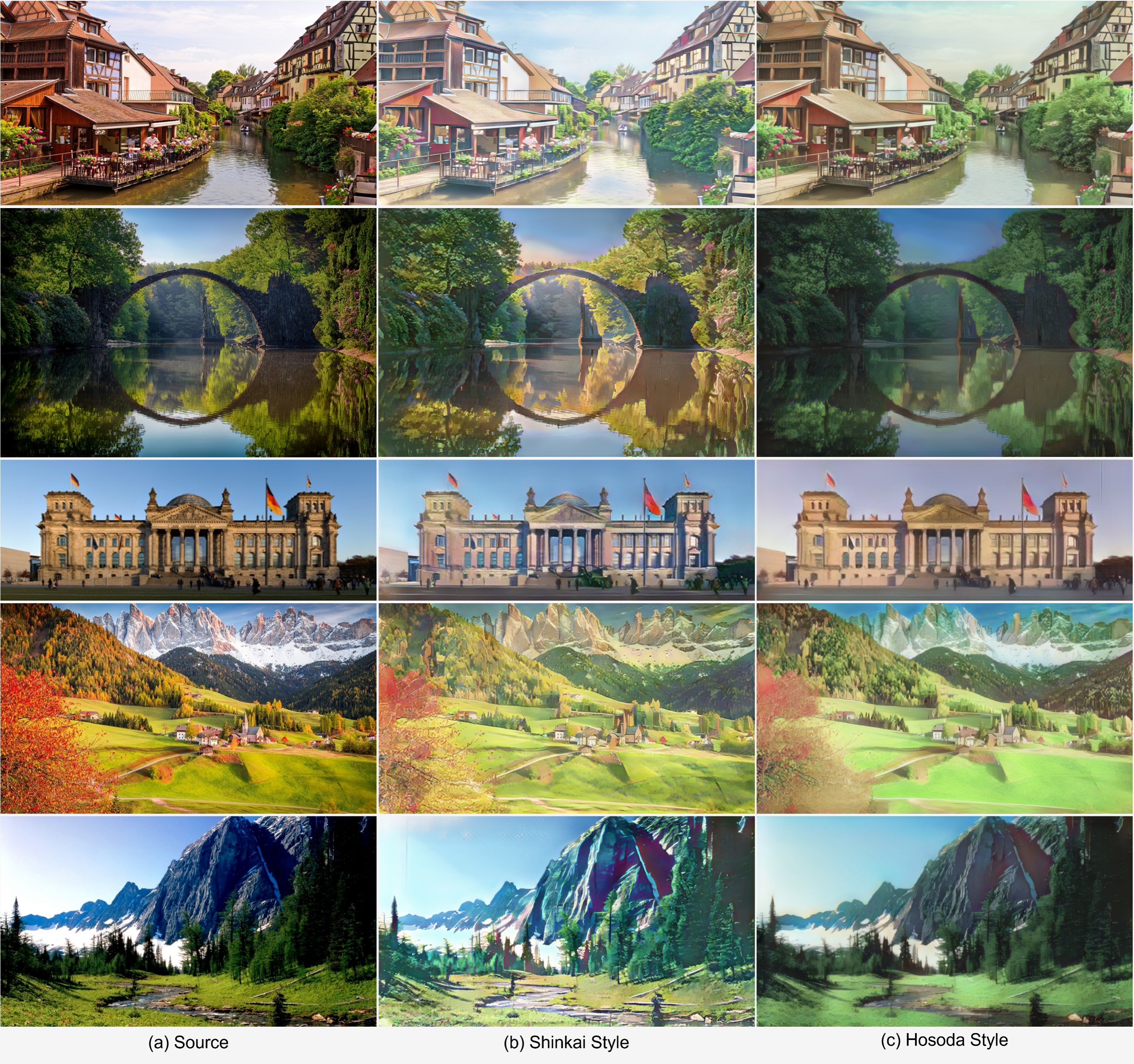

In addition, we contribute a high-resolution anime scene dataset to facilitate future research. Our extensive experiments demonstrate the superiority of our method over state-of-the-art baselines in terms of both perceptual quality and quantitative performance.

Our goal is to stylize natural scenes with fine-grained anime textures while preserving the underlying semantics. We formulate Scenimefy as a three-stage pipeline: (1) paired data generation, (2) segmentation-guided data selection, and (3) semi-supervised image-to-image translation.
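The selection criterion used in stage (2) is not described in this overview. A minimal sketch of one plausible filter is shown below, assuming each pseudo pair comes with two segmentation label maps (one for the real image, one for its pseudo anime counterpart) produced by some off-the-shelf segmentation model that is not shown here. A pair is kept only if the two maps agree on a large enough fraction of pixels. The helper names `pixel_agreement` and `select_pairs`, the pixel-agreement measure, and the 0.8 threshold are all hypothetical choices for illustration.

```python
def pixel_agreement(seg_a, seg_b):
    """Fraction of pixels carrying the same class label in two label maps.

    Each map is a list of rows, and each row is a list of integer class ids.
    """
    same_shape = len(seg_a) == len(seg_b) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(seg_a, seg_b)
    )
    if not same_shape:
        raise ValueError("label maps must have the same shape")

    total = sum(len(row) for row in seg_a)
    if total == 0:
        raise ValueError("label maps must not be empty")

    same = sum(
        a == b
        for row_a, row_b in zip(seg_a, seg_b)
        for a, b in zip(row_a, row_b)
    )
    return same / total


def select_pairs(pairs, min_agreement=0.8):
    """Return indices of pseudo pairs whose label maps agree well enough.

    `pairs` is a list of (real_label_map, pseudo_label_map) tuples.
    The threshold is an assumed value, not one reported by the authors.
    """
    return [
        i
        for i, (seg_real, seg_pseudo) in enumerate(pairs)
        if pixel_agreement(seg_real, seg_pseudo) >= min_agreement
    ]
```

A filter of this kind discards pseudo pairs whose layout drifted during generation, so that only structure-consistent pairs supervise the translation stage.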

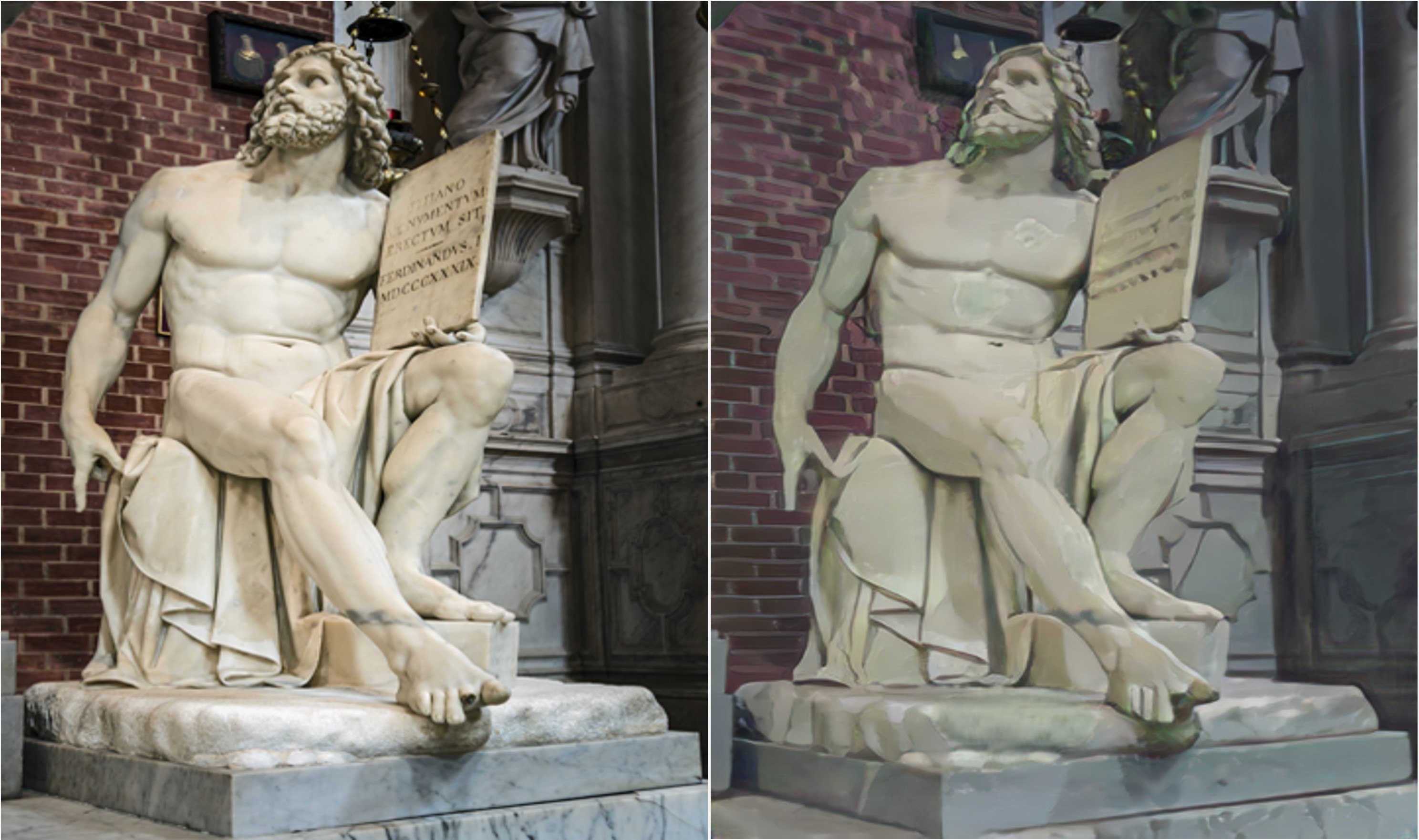

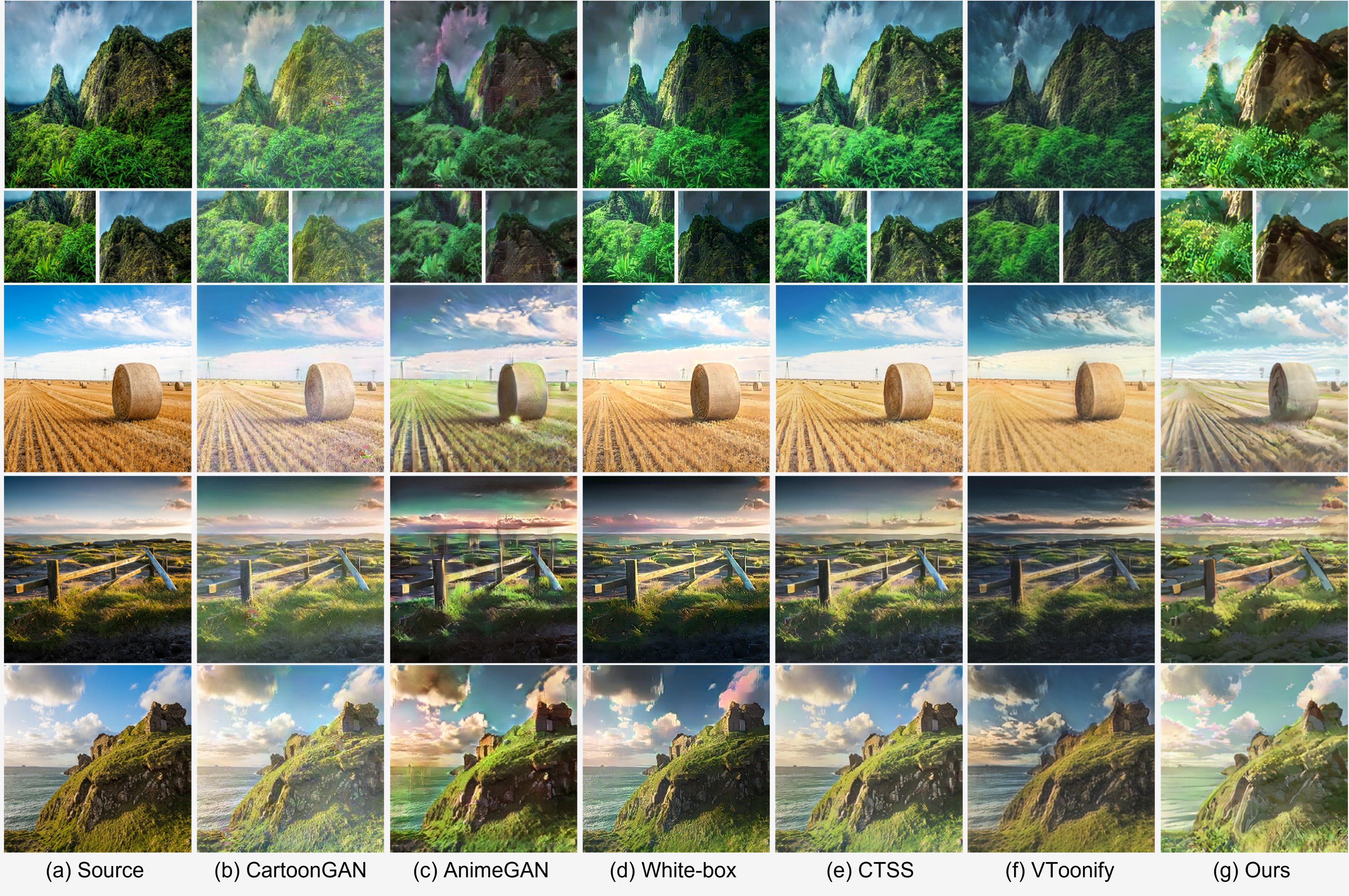

We compare our approach with five representative methods: (1) four image-to-image translation methods customized for scene cartoonization, namely CartoonGAN, AnimeGAN, White-box, and CTSS; and (2) a StyleGAN-based approach, VToonify. Compared with these state-of-the-art baselines, Scenimefy (ours) produces more semantically consistent results with more evident anime-style textures.

Our study also contributes a high-resolution (1080×1080), Shinkai-style, pure anime scene dataset comprising 5,958 images with the following construction details:

@inproceedings{jiang2023scenimefy,
  author    = {Jiang, Yuxin and Jiang, Liming and Yang, Shuai and Loy, Chen Change},
  title     = {Scenimefy: Learning to Craft Anime Scene via Semi-Supervised Image-to-Image Translation},
  booktitle = {ICCV},
  year      = {2023},
}